症状

生产环境业务页面打不开,看错误日志是 OutOfDirectMemoryError (OOM)了,详情报错信息如下:

2022-03-21 06:00:00.541 [,] [tafprx-asyrecv_9] ERROR r.c.p.Operators - [error,314] - Operator called default onErrorDropped

java.lang.OutOfMemoryError: Direct buffer memory

at java.base/java.nio.Bits.reserveMemory(Bits.java:175)

at java.base/java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:118)

at java.base/java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:317)

at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:632)

at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:607)

at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:202)

at io.netty.buffer.PoolArena.tcacheAllocateSmall(PoolArena.java:172)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:134)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:126)

at io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:395)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:178)

at io.netty.buffer.AbstractByteBufAllocator.buffer(AbstractByteBufAllocator.java:115)

at org.springframework.core.io.buffer.NettyDataBufferFactory.allocateBuffer(NettyDataBufferFactory.java:71)

at org.springframework.core.io.buffer.NettyDataBufferFactory.allocateBuffer(NettyDataBufferFactory.java:39)

at org.springframework.http.codec.json.AbstractJackson2Encoder.encodeValue(AbstractJackson2Encoder.java:236)

at org.springframework.http.codec.json.AbstractJackson2Encoder.lambda$encode$0(AbstractJackson2Encoder.java:150)

at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:113)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1815)

at reactor.core.publisher.MonoCompletionStage.lambda$subscribe$0(MonoCompletionStage.java:82)

at java.base/java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:859)

at java.base/java.util.concurrent.CompletableFuture.uniWhenCompleteStage(CompletableFuture.java:883)

at java.base/java.util.concurrent.CompletableFuture.whenComplete(CompletableFuture.java:2251)

at java.base/java.util.concurrent.CompletableFuture.whenComplete(CompletableFuture.java:143)

at reactor.core.publisher.MonoCompletionStage.subscribe(MonoCompletionStage.java:57)

at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)

at reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:157)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1815)

at reactor.core.publisher.MonoFlatMap$FlatMapInner.onNext(MonoFlatMap.java:249)

at reactor.core.publisher.FluxOnErrorResume$ResumeSubscriber.onNext(FluxOnErrorResume.java:79)

at reactor.core.publisher.FluxPeek$PeekSubscriber.onNext(FluxPeek.java:199)

at reactor.core.publisher.FluxPeek$PeekSubscriber.onNext(FluxPeek.java:199)

at reactor.core.publisher.MonoIgnoreThen$ThenIgnoreMain.complete(MonoIgnoreThen.java:284)

at reactor.core.publisher.MonoIgnoreThen$ThenIgnoreMain.onNext(MonoIgnoreThen.java:187)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1815)

at reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:151)

根据报错的堆栈,找到 AbstractJackson2Encoder:236 行,调试发现框架使用的buffer在最后都进行了释放,看着没问题。

JDK版本

openjdk version "11.0.4" 2019-07-16 LTS

OpenJDK Runtime Environment Corretto-11.0.4.11.1 (build 11.0.4+11-LTS)

OpenJDK 64-Bit Server VM Corretto-11.0.4.11.1 (build 11.0.4+11-LTS, mixed mode)Bluff@2015

JVM配置

-Dspring.profiles.active=prod -Dreactor.netty.http.server.accessLogEnabled=true \

-Xms6G -Xmx6G -XX:MaxDirectMemorySize=6G -XX:+HeapDumpOnOutOfMemoryError \

-XX:+UnlockExperimentalVMOptions -XX:+UseZGC

线上复现

堆外内存OOM还是第一次遇到,有点无从查起。想着先在线上看一下堆外内存的使用情况,在JVM中增加以下配置:

-XX:NativeMemoryTracking=detail

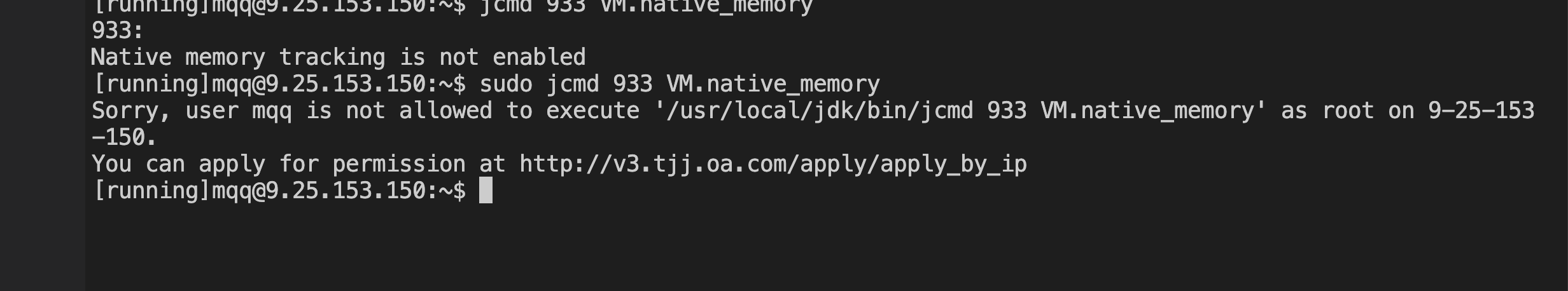

满心欢喜上机器上执行查看堆外内存的命令

jcmd 933 VM.native_memory

被现实当头一棒,没有权限获取内存使用信息,后来查了一下堆外内存由操作系统直接管理,要查看需要有root权限,此路不通。

本地复现

线上不行,只能本地进行复现了。项目使用的是SpringWebflux,通信框架使用的Netty,错误信息也描述的很详细,Netty喊着内存不够了。本地复现主要是控制堆外内存配置的小一点,方便问题出现。

配置验证使用的JVM参数

-DSpring.profiles.active=dev

-Dreactor.netty.http.server.accessLogEnabled=true

-Dlogback.path=/tmp

-XX:NativeMemoryTracking=detail

-Xms1G

-Xmx1G

-XX:MaxDirectMemorySize=10m

-Dio.netty.maxDirectMemory=204800 //给netty的内存小一点

--add-opens java.base/jdk.internal.misc=ALL-UNNAMED

-Dio.netty.tryReflectionSetAccessible=true

-XX:+HeapDumpOnOutOfMemoryError

-Dio.netty.leakDetection.level=advanced

-XX:+UnlockExperimentalVMOptions

发送请求查看日志

内存增加,在响应结束后内存并未减少,起初是使用 130k ,并发请求后,直接到 198k ,然后OOM了。

2022-03-30 20:10:29.320 [,] [pool-4-thread-1] INFO c.y.c.r.DirectMemReporter - [doReport,51] - netty_direct_memory size:133120b,130k, max:204800

io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 2048 byte(s) of direct memory (used: 202816, max: 204800)

at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:802)

at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:731)

at io.netty.buffer.UnpooledUnsafeNoCleanerDirectByteBuf.allocateDirect(UnpooledUnsafeNoCleanerDirectByteBuf.java:30)

at io.netty.buffer.UnpooledDirectByteBuf.<init>(UnpooledDirectByteBuf.java:64)

at io.netty.buffer.UnpooledUnsafeDirectByteBuf.<init>(UnpooledUnsafeDirectByteBuf.java:41)

at io.netty.buffer.UnpooledUnsafeNoCleanerDirectByteBuf.<init>(UnpooledUnsafeNoCleanerDirectByteBuf.java:25)

at io.netty.buffer.UnsafeByteBufUtil.newUnsafeDirectByteBuf(UnsafeByteBufUtil.java:632)

at io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:397)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:188)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:179)

at io.netty.channel.unix.PreferredDirectByteBufAllocator.ioBuffer(PreferredDirectByteBufAllocator.java:53)

at io.netty.channel.DefaultMaxMessagesRecvByteBufAllocator$MaxMessageHandle.allocate(DefaultMaxMessagesRecvByteBufAllocator.java:120)

at io.netty.channel.kqueue.KQueueRecvByteAllocatorHandle.allocate(KQueueRecvByteAllocatorHandle.java:63)

at io.netty.channel.kqueue.AbstractKQueueStreamChannel$KQueueStreamUnsafe.readReady(AbstractKQueueStreamChannel.java:529)

at io.netty.channel.kqueue.AbstractKQueueChannel$AbstractKQueueUnsafe.readEOF(AbstractKQueueChannel.java:485)

at io.netty.channel.kqueue.KQueueEventLoop.processReady(KQueueEventLoop.java:213)

at io.netty.channel.kqueue.KQueueEventLoop.run(KQueueEventLoop.java:289)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.base/java.lang.Thread.run(Thread.java:834)

2022-03-30 20:09:09.813 [,] [pool-4-thread-1] INFO c.y.c.r.DirectMemReporter - [doReport,51] - netty_direct_memory size:202816b,198k, max:204800

难道netty真的没有进行回收?

增加监控(双管齐下)

明确有问题,考虑先把监控加到线上,这样可以及时发现问题并做相应(重启?哈哈)处理。

堆外监控代码上报

主要是获取到Netty中 PlatformDependent 类的 DIRECT_MEMORY_COUNTER 字段,这个字段记录了当前Netty使用的内存信息

public DirectMemReporter() {

Field field = ReflectionUtils.findField(PlatformDependent.class, "DIRECT_MEMORY_COUNTER");

assert field != null;

field.setAccessible(true);

try {

directMem = ((AtomicLong) field.get(PlatformDependent.class));

} catch (Exception e) {

log.error("got PlatformDependent.class DIRECT_MEMORY_COUNTER error", e);

}

}

private void doReport() {

try {

long size = directMem.get();

log.info("{} size:{}b,{}k, max:{}", BUSINESS_KEY, size, (int) (size / _1k),

PlatformDependent.maxDirectMemory());

} catch (Exception e) {

log.error("doReport error", e);

}

}

接入Premethues监控

@Override

public void bindTo(final MeterRegistry registry) {

Gauge.builder("netty.pooled.used.memory", this.metric, PooledByteBufAllocatorMetric::usedDirectMemory)

.tags("type", "direct")

.description("The used memory")

.baseUnit(BYTES_UNIT).register(registry);

Gauge.builder("netty.pooled.used.memory", this.metric, PooledByteBufAllocatorMetric::usedHeapMemory)

.tags("type", "heap")

.description("The used memory")

.baseUnit(BYTES_UNIT).register(registry);

}

new NettyPooledAllocatorMetrics(PooledByteBufAllocator.DEFAULT.metric()).bindTo(prometheusRegistry);

先看看监控

看监控信息,涨的还挺有节奏,这个和业务量有关,如果量再大问题就出现的更频繁了。

尝试解决,却都未解决

升级springboot版本

第一想到的升级版本,没有升级解决不了的,说整就整,springboot版本从2.5.2升级到2.6.3,测试后问题依旧。

让Netty使用堆内存

-Dio.netty.noPreferDirect=true

线上配置上线一台机器后,内存还在涨,无回收迹象(不是说使用的弱引用吗,GC也干不掉?)。

Redis的问题?

项目使用的是 Lettuce ,正常应该使用全异步方式进行代码编写,但是由于项目前期时间有限,且对异步编程认识有限,未敢尝试,现在心想着就都重新写成异步的方式吧。改造完成后,内存增加依旧,不过代码看起来更顺畅了。

其它无效尝试

尝试使用netty pooled分配

不行,不能使用 NettyCustomer ,还得看一下如何自定义,得深入Spring。

是Filter的问题?自定义Filter的方式不对?

参照Spring WebFlux Filters with Controllers and Functional Routers看了一下,Fiter处理的没问题。

netty启动报错

netty启动时报 direct buffer constructor: unavailable ,是因为JDK9之后分模块的问题,参照JDK从8升级到11时Netty报错增加模块权限即可,不影响Netty使用。

--add-opens java.base/jdk.internal.misc=ALL-UNNAMED -Dio.netty.tryReflectionSetAccessible=true

最终解决,代码问题

经过上述尝试后,开始梳理代码发现了问题所在。下面是一段操作web响应内容的 Fiter 类代码,主要功能是抽取返回值加上通用登录字段信息最终返回,dataBuffer在被抽取后没有被释放,导致内存泄漏。

@Override

public Mono<Void> writeWith(Publisher<? extends DataBuffer> body) {

final Flux<? extends DataBuffer> buffer = Flux.from(body);

return super.writeWith(buffer

.flatMap(dataBuffer -> {

String response = extractResponse(dataBuffer);

String result = response;

if (response.startsWith("{")) {

int isLogin = UserUtil.isLogin(appRequest.getUin()) ? 1 : 0;

String loginPrefix = "{\"isLogin\":" + isLogin + ",";

result = loginPrefix + response.substring(1);

HttpHeaders headers = getDelegate().getHeaders();

headers.setContentLength(headers.getContentLength() + loginPrefix.length() - 1);

}

setUserCookie();

return Mono.just(exchange.getResponse().bufferFactory().wrap(result.getBytes(StandardCharsets.UTF_8)));

})

.doOnNext(dataBuffer -> {

try {

final RLog rlog = buildRlog();

rlog.setResp(extractResponse(dataBuffer));

logService.accessLog(rlog);

} catch (Exception e) {

log.error("record access log error", e);

}

}));

}

进行修正

最终定位代码问题,长叹一声,改!增加 DataBufferUtils.release(dataBuffer) 方法对内存进行释放。

@Override

public Mono<Void> writeWith(Publisher<? extends DataBuffer> body) {

final Flux<? extends DataBuffer> buffer = Flux.from(body);

return super.writeWith(buffer

.flatMap(dataBuffer -> {

String response = extractResponse(dataBuffer);

DataBufferUtils.release(dataBuffer); //增加的内存释放代码

String result = response;

if (response.startsWith("{")) {

int isLogin = UserUtil.isLogin(appRequest.getUin()) ? 1 : 0;

String loginPrefix = "{\"isLogin\":" + isLogin + ",";

result = loginPrefix + response.substring(1);

HttpHeaders headers = getDelegate().getHeaders();

headers.setContentLength(headers.getContentLength() + loginPrefix.length() - 1);

}

setUserCookie();

return Mono.just(exchange.getResponse().bufferFactory().wrap(result.getBytes(StandardCharsets.UTF_8)));

})

.doOnNext(dataBuffer -> {

try {

final RLog rlog = buildRlog();

rlog.setResp(extractResponse(dataBuffer));

logService.accessLog(rlog);

} catch (Exception e) {

log.error("record access log error", e);

}

}));

}

问题修复一天后的监控

经过一天的观察,内存使用保持在1个G左右,问题解决,长出一口气。

排查问题中产生的疑问

在排查过程中有一些疑问未解,在此记录一下:

- Netty配置使用堆内存,有内存泄漏,在JVM Full GC时会不会回收掉?

- 默认lettuce使用是异步操作,为什么使用同步的redisTemplate也可以呢?

总结

此次排查耗时一周多,费心费力,中间还是走了一些弯路的,还好学习到很多,也深深意识到自己基础知识的不足,主要还是对netty、reactor和springwebflux认识有限,后续要深入学习一下。

参考

命中 SpringCloudGateway 组件 BUG | 彩虹马的博客

netty堆外内存监控_colie_li的博客-CSDN博客_netty内存监控

JDK从8升级到11时Netty报错 - 身处寒夜,把握星光。

Spring WebFlux Filters with Controllers and Functional Routers - amitph